Testing and Verification

Programming for Data Science

All happy families are alike, but every unhappy family is unhappy in its own way.

– Leo Tolstoy, Anna Karenina, 1878.

When Code Breaks

In this module, we learn how to deal with broken code.

By “broken code,” we mean everything from code that does not run to code that does not meet users’ expectations.

This is a broad topic, with lots of cases in between, so let’s break it down.

In the first case, code may not work for a variety of reasons:

- It may not run at all.

- It may be “brittle” or “buggy.”

- It may be sensitive to contextual factors like bad user input or a bad database connection.

- It may run but produce incorrect outcomes.
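Two of the failure modes above can be illustrated with a pair of hypothetical averaging functions (the names are made up for this sketch). `average_wrong` runs without error but produces an incorrect outcome. `average_brittle` is correct for normal input but breaks on bad input, here an empty list.

```python
# Hypothetical examples of "broken" code; the function names are illustrative only.

def average_wrong(values):
    """Runs without error but produces an incorrect outcome (off-by-one denominator)."""
    return sum(values) / (len(values) - 1)


def average_brittle(values):
    """Correct for well-formed input, but breaks on an empty list."""
    return sum(values) / len(values)


print(average_wrong([2, 4, 6]))    # 6.0, but the true mean is 4.0
print(average_brittle([2, 4, 6]))  # 4.0

try:
    average_brittle([])  # bad input: there is nothing to average
except ZeroDivisionError as err:
    print("Bad input:", err)  # prints an error message instead of a number
```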

In the second case, code may not meet the general or specific user expectations for the code.

- It may not do what it is supposed to do.

- It may perform too poorly to be useful.

- It may not add any value to a business process.

- It may be unethical or harmful.

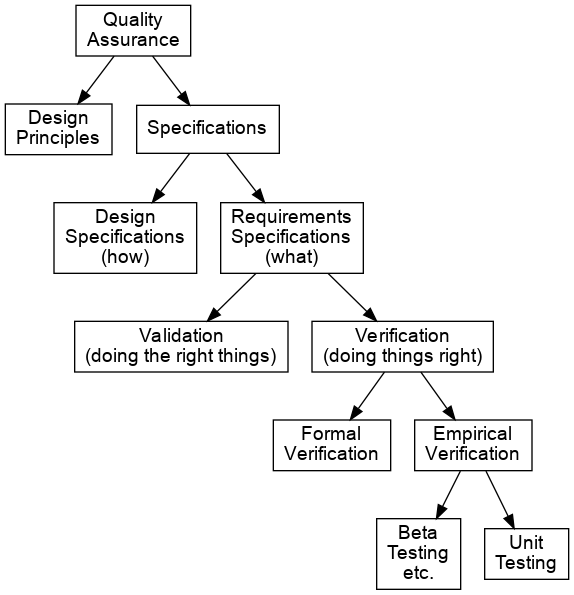

Quality Assurance

Dealing with broken code at both levels falls under the broad category of quality assurance, or QA.

Two broad approaches to QA are good design and principled testing based on requirements.

Design

Good design means following sound architectural principles and patterns, as well as using the right tools (e.g. Python :-)

Some principles of good design are:

- Favor the simple over the complex.

- Invest in data design to simplify algorithm design.

- Write literate code so that it is intelligible to you and others.

- Use tools that are documented and have a strong user community.

Specifications

Testing, on the other hand, depends on the codification of performance expectations, independent of how things are built.

Expectations are typically encoded in specifications: documents containing precise and detailed statements, defined by stakeholders, about the properties the code must have.

There are different kinds of specification, or “spec”.

Here are two common ones:

A design specification provides exact instructions for how to build something. This goes beyond principles and identifies specific tools, platforms, etc.

A requirements specification provides exact statements about what should be built. These specify what the code should do, not how or why.
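To make the "what, not how" idea concrete, here is a minimal sketch of a requirements spec for a single hypothetical function, `clip_scores`, written as a Python docstring. The function and its numbered requirements are illustrative assumptions, not part of any real project. The example embedded in the docstring can be checked automatically with Python's standard `doctest` module.

```python
def clip_scores(scores, low=0, high=100):
    """Return a new list in which every score is clipped to [low, high].

    Requirements (what the function must do, not how):
      R1. Scores below `low` become `low`.
      R2. Scores above `high` become `high`.
      R3. Scores already in range are unchanged.
      R4. The input list is not modified.

    >>> clip_scores([-5, 50, 120])
    [0, 50, 100]
    """
    # One possible implementation; the spec above does not dictate this choice.
    return [min(max(s, low), high) for s in scores]


if __name__ == "__main__":
    import doctest
    doctest.testmod()  # runs the example embedded in the docstring
```

Any implementation that satisfies R1 to R4 would meet this spec; the list comprehension is just one choice.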

Here, we will focus on requirements.

Requirements

Requirements are elicited from stakeholders through a process that typically involves project management. We will cover this topic in more depth in a later module.

For now, assuming that we have some requirements in hand, our job as coders is to meet those requirements.

This means both designing code in accordance with the specs and demonstrating, in a shareable way, that the code does in fact meet those requirements. This demonstration involves two activities: verification and validation.

Verification ensures the software is built right, according to the requirements.

Validation ensures the software is doing the right thing, relative to the problem the client is trying to solve.

The distinction is similar to the one Peter Drucker draws in this quote:

It’s more important to do the right thing than to do things right.

In this module, we focus on verification.

Requirements Testing

To verify that our code aligns with our requirements and is of the highest quality we can provide, we follow testing procedures.

This is where requirements specifications come in. They provide the blueprint for testing.
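As a sketch of how a spec becomes a testing blueprint, the example below assumes a hypothetical function `safe_mean` with three made-up requirements: (R1) it returns the arithmetic mean of the values, (R2) it raises `ValueError` when there is nothing to average, and (R3) it ignores missing values (`None`). Each test function checks exactly one requirement, so a failing test points straight back to the requirement that is not met.

```python
import math


def safe_mean(values):
    """Hypothetical function under test."""
    present = [v for v in values if v is not None]  # R3: drop missing values
    if not present:
        raise ValueError("no values to average")  # R2: nothing to average
    return sum(present) / len(present)  # R1: arithmetic mean


# Each test is traceable to one requirement in the (hypothetical) spec.
def test_r1_returns_mean():
    assert math.isclose(safe_mean([1, 2, 3, 4]), 2.5)


def test_r2_empty_input_raises():
    try:
        safe_mean([])
    except ValueError:
        return
    raise AssertionError("expected ValueError for empty input")


def test_r3_ignores_missing_values():
    assert safe_mean([1, None, 3]) == 2.0


if __name__ == "__main__":
    for test in (test_r1_returns_mean, test_r2_empty_input_raises, test_r3_ignores_missing_values):
        test()
    print("all requirement tests passed")
```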

If you don’t have specifications for the product, you cannot verify that you’re building it right.

It also becomes difficult to identify incorrect behavior.

Debugging the Spec

Specifications are rarely perfect. They can change over time for a variety of reasons.

In fact, some have joked that programming is the act of “debugging the spec”:

The process of interpreting what is wanted and making something sort of like it happen on a monitor is one that very much requires the kinds of interpretation Gadamer describes — in fact, the act of interpreting the original articulation generally leads to a re-formulation of the activity itself, so that programming becomes a way to change how people think of themselves and their work, not simply a reflection of the tasks at hand.

Halsted 2001

Don’t worry about who Gadamer was, other than to note that he was a famous German philosopher who argued that the essence of knowledge is interpretation and that interpretation always involves play.

Broadly speaking, there are two kinds of testing and verification: formal and empirical.

Formal Verification

Formal verification is the process of mathematically proving that a system satisfies a given property.

It works by defining a formal model for the system and its behavior and then comparing the software to the model.
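To give a flavor of formal verification, here is a tiny example in the Lean 4 proof assistant, a tool this module does not otherwise use. The theorem (its name is made up for this sketch) states that reversing a list of natural numbers twice returns the original list, and Lean checks the proof for every possible list, not just for a handful of inputs.

```lean
-- A property stated once and proved for *all* lists of natural numbers.
theorem reverse_twice (xs : List Nat) : xs.reverse.reverse = xs := by
  simp  -- closes the goal using the library simp lemma List.reverse_reverse
```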

Given their complexity, formal verification methods are not widely used.

Empirical Verification

With empirical procedures, we demonstrate that code meets its requirements by running tests on it.

Empirical testing checks whether software works in practice.
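For contrast with a proof, an empirical check runs the code on concrete inputs. The sketch below tests the property "reversing a list twice returns the original list" on 1,000 randomly generated lists, using a hypothetical `reverse` function and a fixed random seed so the run is reproducible. Passing is evidence that the code works in practice, not a proof that it always does.

```python
import random


def reverse(xs):
    """Function under test: return a reversed copy of a list."""
    return xs[::-1]


def check_reverse_twice(trials=1000, seed=0):
    """Empirically check reverse(reverse(xs)) == xs on randomly generated lists."""
    rng = random.Random(seed)  # fixed seed so the check is reproducible
    for _ in range(trials):
        xs = [rng.randint(-100, 100) for _ in range(rng.randint(0, 20))]
        if reverse(reverse(xs)) != xs:
            return False, xs  # a counterexample shows a bug is present
    return True, None  # no counterexample found in these trials


print(check_reverse_twice())  # (True, None)
```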

It is easier than using proofs.

But it’s harder than just writing code.

We will focus on empirical testing.

Unit Testing

There are many kinds of empirical testing.

The most popular is unit testing.

In unit testing, we write code that tests the smallest possible units of the requirements spec.
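A minimal unit test, sketched with Python's built-in `unittest` module: the hypothetical function `word_count` is the unit, and each test method checks one small, specific piece of its required behavior.

```python
import unittest


def word_count(text):
    """Hypothetical unit under test: count whitespace-separated words."""
    return len(text.split())


class TestWordCount(unittest.TestCase):
    # Each test method checks one small, specific piece of required behavior.
    def test_simple_sentence(self):
        self.assertEqual(word_count("all happy families are alike"), 5)

    def test_empty_string_has_no_words(self):
        self.assertEqual(word_count(""), 0)

    def test_extra_whitespace_is_ignored(self):
        self.assertEqual(word_count("  data   science \n"), 2)
```

Saved as, say, `test_word_count.py` (a hypothetical file name), the tests can be run from the command line with `python -m unittest test_word_count`.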

The programmer does unit testing as part of the coding process.

It assumes that code is componential, i.e., that the smallest units are functionally independent and can be combined in principled ways.

This raises the issue of writing well-designed functions and classes.
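The following sketch, with hypothetical function names, shows what componential code can look like: a small report is built from two independent units, `parse_amounts` and `total`, each of which can be unit tested on its own before they are combined.

```python
def parse_amounts(raw):
    """Unit 1: turn strings like '$1,200' into floats; blank entries are skipped."""
    cleaned = [r.replace("$", "").replace(",", "").strip() for r in raw]
    return [float(c) for c in cleaned if c]


def total(amounts):
    """Unit 2: add up a list of numbers."""
    return sum(amounts)


def report_total(raw):
    """Composition of the two units above."""
    return total(parse_amounts(raw))


# Each small unit is tested independently of the other.
assert parse_amounts(["$1,200", " 30 ", ""]) == [1200.0, 30.0]
assert total([1200.0, 30.0]) == 1230.0
```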

Two other kinds of testing are integration and acceptance (beta) testing.

Integration Testing: Test that units work together.

Acceptance Testing (Beta Testing): Give product to real users to try it out.

We will not cover these here.

Debugging

Another aspect of testing and verification which we will not cover here is debugging.

Debugging (the word “bug” was popularized by Grace Hopper) refers to the process of investigating precisely where and when code breaks.

Programming environments like JupyterLab and Visual Studio Code provide good tools for debugging.

A Note of Caution

Edsger Dijkstra was a famous computer scientist and A. M. Turing Award winner. He said:

“Program testing can effectively show the presence of bugs but is hopeless for showing their absence.”

– Edsger Dijkstra

Even if you write a test suite of carefully crafted test cases, and if they all run and pass, it doesn’t mean that no further bugs exist.
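Dijkstra’s point can be made concrete with a small, deliberately buggy sketch. The hypothetical `median` function below passes every test in its suite, because all the test cases happen to have an odd number of values, yet it still returns the wrong answer for even-length input.

```python
def median(values):
    """Hypothetical buggy median: correct only for odd-length input."""
    ordered = sorted(values)
    return ordered[len(ordered) // 2]


# A small test suite; every case happens to use an odd number of values.
assert median([3, 1, 2]) == 2
assert median([5]) == 5
assert median([9, 7, 8, 1, 4]) == 7
print("all tests passed")

# Yet the bug is still there: the median of [1, 2, 3, 4] is 2.5, not 3.
print(median([1, 2, 3, 4]))  # 3
```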

It is much easier to prove that something exists than to prove that it does not.

This realization should motivate us to write carefully crafted unit tests that cover as much of the code’s required behavior as we can.

Visual Summary